Service Mesh with Istio on Kubernetes in 5 steps

In this article, I’m going to show you some basic and more advanced samples that illustrate how to use the Istio platform to provide communication between microservices deployed on Kubernetes. According to the description on the Istio website, it is:

An open platform to connect, manage, and secure microservices. Istio provides an easy way to create a network of deployed services with load balancing, service-to-service authentication, monitoring, and more, without requiring any changes in service code.

Istio provides mechanisms for traffic management like request routing, discovery, load balancing, handling failures, and fault injection. Additionally, you may enable istio-auth, which provides RBAC (Role-Based Access Control) and Mutual TLS Authentication. In this article, we will discuss only the traffic management mechanisms.

Step 1. Installing Istio on Minikube platform

The easiest way to test Istio locally on Kubernetes is with Minikube. I have already described how to configure Minikube on your local machine in this article: Microservices with Kubernetes and Docker. Before installing Istio, we should start Minikube with some extra configuration: the controller manager’s certificate signing files and a list of API server admission plugins that includes MutatingAdmissionWebhook and ValidatingAdmissionWebhook.

```shell
$ minikube start --extra-config=controller-manager.ClusterSigningCertFile="/var/lib/localkube/certs/ca.crt" --extra-config=controller-manager.ClusterSigningKeyFile="/var/lib/localkube/certs/ca.key" --extra-config=apiserver.Admission.PluginNames=NamespaceLifecycle,LimitRanger,ServiceAccount,PersistentVolumeLabel,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota
```

Istio is installed in a dedicated namespace called istio-system, but it is able to manage services from all other namespaces. First, go to the release page and download the installation file for your OS. In my case it is Windows, and all subsequent steps assume that OS. After starting Minikube, it is useful to point your Docker client at the Docker daemon running inside Minikube’s VM. Thanks to that, you will be able to execute docker commands (including image builds) directly against Minikube.

```bat
@FOR /f "tokens=* delims=^L" %i IN ('minikube docker-env') DO @call %i
```

Now, extract the Istio files to your local filesystem. Add the istioctl.exe file, located in the ${ISTIO_HOME}/bin directory, to your PATH. Istio contains some installation files for the Kubernetes platform in ${ISTIO_HOME}/install/kubernetes. To install Istio’s core components on Minikube, just apply the following YAML definition file.

```shell
$ kubectl apply -f install/kubernetes/istio.yaml
```

Now, you have Istio’s core components deployed on your Minikube instance. These components are:

Envoy – an open-source edge and service proxy designed for cloud-native applications. Istio uses an extended version of the Envoy proxy. If you are interested in some details about Envoy and microservices, read my article Envoy Proxy with Microservices, which describes how to integrate an Envoy gateway with service discovery.

Mixer – it is a platform-independent component responsible for enforcing access control and usage policies across the service mesh.

Pilot – it provides service discovery for the Envoy sidecars, traffic management capabilities for intelligent routing, and resiliency.

The istio.yaml definition file deploys the pods and services related to the components mentioned above. You can verify the installation with kubectl (for example, kubectl get pods -n istio-system) or by visiting the Web Dashboard, which opens after executing the minikube dashboard command.

Step 2. Building sample applications based on Spring Boot

Before we start configuring any traffic rules with Istio, we need to create sample applications that communicate with each other. These are really simple services. The source code of these applications is available on my GitHub account in the repository sample-istio-services. There are two services: caller-service and callme-service. Both expose a ping endpoint that returns the application’s name and version. Both values are taken from the Spring Boot build-info file, which is generated during the application build. Here’s the implementation of the GET /callme/ping endpoint.

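```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.info.BuildProperties;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/callme")
public class CallmeController {

    private static final Logger LOGGER = LoggerFactory.getLogger(CallmeController.class);

    // Populated from META-INF/build-info.properties generated at build time
    @Autowired
    BuildProperties buildProperties;

    // GET /callme/ping returns "<name>:<version>" of this application
    @GetMapping("/ping")
    public String ping() {
        LOGGER.info("Ping: name={}, version={}", buildProperties.getName(), buildProperties.getVersion());
        return buildProperties.getName() + ":" + buildProperties.getVersion();
    }

}
```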
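Where do those two values come from? BuildProperties is backed by the META-INF/build-info.properties file, whose entries carry a build. prefix (build.name, build.version, and so on). That file is typically generated by the build-info goal of spring-boot-maven-plugin; I’m assuming this project uses it. The framework-free sketch below, built around a hypothetical BuildInfoReader class, shows how the name:version string returned by /callme/ping maps onto such a file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Hypothetical helper, no Spring required: reads the "build."-prefixed entries
// that BuildProperties exposes and builds the same "name:version" string
// that GET /callme/ping returns.
final class BuildInfoReader {

    private BuildInfoReader() {
    }

    static String nameAndVersion(String buildInfoContent) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(buildInfoContent));
        } catch (IOException e) {
            // StringReader does not fail in practice; rethrow unchecked just in case
            throw new UncheckedIOException(e);
        }
        // Fall back to "unknown" if an entry is missing
        String name = props.getProperty("build.name", "unknown");
        String version = props.getProperty("build.version", "unknown");
        return name + ":" + version;
    }

    public static void main(String[] args) {
        // Made-up sample shaped like a generated build-info.properties file
        String sample = "build.artifact=callme-service\n"
                + "build.name=callme-service\n"
                + "build.version=1.0.0-SNAPSHOT\n";
        System.out.println(nameAndVersion(sample)); // prints callme-service:1.0.0-SNAPSHOT
    }
}
```

In the real service, Spring Boot loads this file for you and strips the build. prefix, which is why the controller calls getName() and getVersion().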

And here’s the implementation of the GET /caller/ping endpoint. It calls the GET /callme/ping endpoint using Spring RestTemplate. We assume that callme-service is available at the address callme-service:8091 on Kubernetes. This service will be exposed inside the Minikube node on port 8091.

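```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.info.BuildProperties;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestTemplate;

@RestController
@RequestMapping("/caller")
public class CallerController {

    private static final Logger LOGGER = LoggerFactory.getLogger(CallerController.class);

    @Autowired
    BuildProperties buildProperties;

    // Spring Boot does not auto-configure a RestTemplate bean, so one must be
    // declared elsewhere in the application (e.g. with a @Bean method)
    @Autowired
    RestTemplate restTemplate;

    @GetMapping("/ping")
    public String ping() {
        LOGGER.info("Ping: name={}, version={}", buildProperties.getName(), buildProperties.getVersion());
        // "callme-service" is the Kubernetes Service name; the Envoy sidecar applies
        // the Istio route rule to decide which version handles the request
        String response = restTemplate.getForObject("http://callme-service:8091/callme/ping", String.class);
        LOGGER.info("Calling: response={}", response);
        return buildProperties.getName() + ":" + buildProperties.getVersion() + ". Calling... " + response;
    }

}
```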

The sample applications run inside Docker containers. Here’s the Dockerfile that builds the image for the caller-service application.

```dockerfile
FROM openjdk:8-jre-alpine
ENV APP_FILE caller-service-1.0.0-SNAPSHOT.jar
ENV APP_HOME /usr/app
EXPOSE 8090
COPY target/$APP_FILE $APP_HOME/
WORKDIR $APP_HOME
# Run through "sh -c" so that $APP_FILE is expanded by the shell at container start
ENTRYPOINT ["sh", "-c"]
CMD ["exec java -jar $APP_FILE"]
```

A similar Dockerfile is available for callme-service. Now, the only thing left to do is build the Docker images, running each command from the corresponding module’s directory.

```shell
$ docker build -t piomin/callme-service:1.0 .
$ docker build -t piomin/caller-service:1.0 .
```

There is also version 2.0.0-SNAPSHOT of callme-service available in the v2 branch. Switch to this branch, build the whole application, and then build the Docker image with the 2.0 tag. Why do we need version 2.0? I’ll explain in the next section.

```shell
$ docker build -t piomin/callme-service:2.0 .
```

Step 3. Deploying sample applications on Minikube

Before we start deploying our applications on Minikube, let’s take a look at the sample system architecture shown in the following diagram. We are going to deploy callme-service in two versions: 1.0 and 2.0. The caller-service application just calls callme-service, so it does not know anything about the different versions of the target service. If we would like to split traffic between the two versions of callme-service in a 20:80 proportion, we have to configure the proper Istio route rule. One more thing: because Istio Ingress is not supported on Minikube, we will just use a Kubernetes Service. If we need to expose it outside the Minikube cluster, we should set its type to NodePort.
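The split itself is performed by the Envoy sidecars according to the route rule, not by caller-service. As a rough mental model only, weighted routing boils down to drawing a random number and mapping it onto cumulative weights. The Java sketch below illustrates that idea; WeightedVersionPicker is a hypothetical class and is not how Envoy or Istio implement it internally.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

// Conceptual sketch only: picks a version label in proportion to its weight,
// similar in spirit to a 20:80 route rule. Not Envoy's or Istio's real code.
final class WeightedVersionPicker {

    private final Map<String, Integer> weights; // version label -> weight
    private final int totalWeight;
    private final Random random;

    WeightedVersionPicker(Map<String, Integer> weights, Random random) {
        this.weights = new LinkedHashMap<>(weights); // keep the caller's order
        int sum = 0;
        for (int w : this.weights.values()) {
            if (w < 0) {
                throw new IllegalArgumentException("weights must be non-negative");
            }
            sum += w;
        }
        if (sum <= 0) {
            throw new IllegalArgumentException("total weight must be positive");
        }
        this.totalWeight = sum;
        this.random = random;
    }

    // Draws a number in [0, totalWeight) and walks the cumulative weights
    String pick() {
        int draw = random.nextInt(totalWeight);
        int cumulative = 0;
        for (Map.Entry<String, Integer> entry : weights.entrySet()) {
            cumulative += entry.getValue();
            if (draw < cumulative) {
                return entry.getKey();
            }
        }
        throw new IllegalStateException("unreachable: draw is always below totalWeight");
    }

    public static void main(String[] args) {
        Map<String, Integer> weights = new LinkedHashMap<>();
        weights.put("v1", 20);
        weights.put("v2", 80);
        WeightedVersionPicker picker = new WeightedVersionPicker(weights, new Random(42));
        int v1 = 0;
        int requests = 10_000;
        for (int i = 0; i < requests; i++) {
            if (picker.pick().equals("v1")) {
                v1++;
            }
        }
        System.out.printf("v1 share: %.3f%n", v1 / (double) requests);
    }
}
```

With a fixed seed the run is reproducible, and over many requests the v1 share should come out close to 0.2; any single request, however, can land on either version.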

Let’s proceed to the deployment phase. Here’s the deployment definition for callme-service in version 1.0.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: callme-service
  labels:
    app: callme-service
spec:
  type: NodePort
  ports:
  - port: 8091
    name: http
  selector:
    app: callme-service
---
# extensions/v1beta1 matches the Kubernetes versions of the Istio 0.x era; it was
# removed in Kubernetes 1.16, where apps/v1 (which also requires spec.selector) is needed
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: callme-service
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: callme-service
        version: v1
    spec:
      containers:
      - name: callme-service
        image: piomin/callme-service:1.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8091
```

Before deploying it on Minikube, we have to inject the Istio configuration. The command below prints a new version of the deployment definition enriched with the Istio sidecar proxy. We can save its output (for example, by redirecting it) as the deployment-with-istio.yaml file.

```shell
$ istioctl kube-inject -f deployment.yaml
```

Now, let’s apply the configuration to Kubernetes.

```shell
$ kubectl apply -f deployment-with-istio.yaml
```

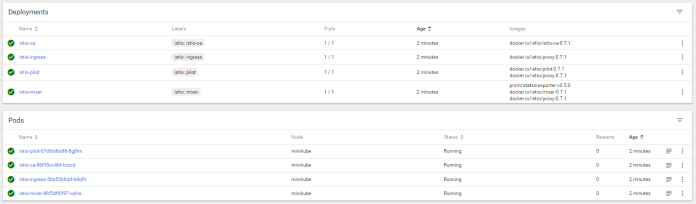

The same steps should be performed for caller-service and for version 2.0 of callme-service. All YAML configuration files are committed together with the applications and are located in the root directory of each application’s module. If you have successfully deployed all the required components, you should see the following elements in your Minikube dashboard.

Step 4. Applying Istio routing rules

Istio provides a simple domain-specific language (DSL) that allows you to configure some interesting rules that control how requests are routed within your service mesh. I’m going to show you the following rules:

- Splitting traffic between different service versions
- Injecting a delay in the request path
- Injecting an HTTP error as a response from a service

Here’s a sample route rule definition for callme-service. It splits traffic in a 20:80 proportion between versions 1.0 and 2.0 of the service. It also adds a 3-second delay to 10% of the requests and returns an HTTP 500 error code for 10% of the requests.

```yaml
apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: callme-service
spec:
  destination:
    name: callme-service
  route:
  - labels:
      version: v1
    weight: 20
  - labels:
      version: v2
    weight: 80
  httpFault:
    delay:
      percent: 10
      fixedDelay: 3s
    abort:
      percent: 10
      httpStatus: 500
```
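To make the httpFault section concrete, here is a small Java simulation of what the rule asks for: a 3-second delay for 10% of requests and an HTTP 500 for 10% of requests. It is only a sketch; FaultInjectionSketch is a hypothetical name, and treating the delay and abort decisions as independent per request is my assumption for illustration, not a statement about Envoy’s internals.

```java
import java.time.Duration;
import java.util.Random;

// Conceptual sketch of the httpFault section. Each request independently gets
// the fixed delay with probability delayPercent and an abort with probability
// abortPercent; the independence is an assumption made for illustration.
final class FaultInjectionSketch {

    // Outcome for one simulated request
    static final class Decision {
        final Duration delay; // extra latency before the response
        final int status;     // abortStatus if aborted, otherwise 200 (assumed success)

        Decision(Duration delay, int status) {
            this.delay = delay;
            this.status = status;
        }
    }

    private final double delayPercent;
    private final Duration fixedDelay;
    private final double abortPercent;
    private final int abortStatus;
    private final Random random;

    FaultInjectionSketch(double delayPercent, Duration fixedDelay,
                         double abortPercent, int abortStatus, Random random) {
        this.delayPercent = delayPercent;
        this.fixedDelay = fixedDelay;
        this.abortPercent = abortPercent;
        this.abortStatus = abortStatus;
        this.random = random;
    }

    Decision decide() {
        // nextDouble() is in [0, 1), so scaling by 100 compares against a percentage
        Duration delay = random.nextDouble() * 100 < delayPercent ? fixedDelay : Duration.ZERO;
        int status = random.nextDouble() * 100 < abortPercent ? abortStatus : 200;
        return new Decision(delay, status);
    }

    public static void main(String[] args) {
        // Same values as the rule: 10% delayed by 3s, 10% aborted with HTTP 500
        FaultInjectionSketch faults =
                new FaultInjectionSketch(10, Duration.ofSeconds(3), 10, 500, new Random(1));
        int delayed = 0;
        int aborted = 0;
        int requests = 10_000;
        for (int i = 0; i < requests; i++) {
            Decision d = faults.decide();
            if (!d.delay.isZero()) {
                delayed++;
            }
            if (d.status == 500) {
                aborted++;
            }
        }
        System.out.printf("delayed=%d aborted=%d of %d%n", delayed, aborted, requests);
    }
}
```

Both counts should land near 10% of the simulated requests, which is roughly what you can expect to observe when calling the real service many times.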

Let’s apply the new route rule to Kubernetes.

```shell
$ kubectl apply -f routerule.yaml
```

Now we can verify the rule by executing the command istioctl get routerule.

Step 5. Testing the solution

Before we start testing, let’s deploy Zipkin on Minikube. Istio provides the zipkin.yaml deployment definition file in the ${ISTIO_HOME}/install/kubernetes/addons directory.

```shell
$ kubectl apply -f zipkin.yaml
```

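Let’s take a look at the list of services deployed on Minikube (for example, with kubectl get svc). The API provided by caller-service is available on port 30873. This is the NodePort assigned in my environment; yours will most likely differ.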

We can test the service from a web browser by calling the URL http://192.168.99.100:30873/caller/ping (192.168.99.100 is my Minikube IP address; minikube ip prints yours). It prints the name and version of caller-service, and also the name and version of the callme-service instance it invoked. Because 80% of traffic is routed to version 2.0 of callme-service, you will most likely see the following response.

However, sometimes version 1.0 of callme-service may be called…

… or Istio may inject a simulated HTTP 500 error.
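Refreshing a browser gives only a rough impression of the 20:80 split. A small client that calls the endpoint repeatedly and counts which callme-service version answered makes it easier to check. The sketch below uses the JDK’s java.net.http.HttpClient (Java 11+). SplitChecker and its methods are hypothetical names, the URL is the one from my environment, and the parsing assumes the response format produced by CallerController (for example caller-service:1.0.0-SNAPSHOT. Calling... callme-service:2.0.0-SNAPSHOT). When Istio aborts the downstream call with a 500, RestTemplate in caller-service throws by default, so such requests are likely to show up here as an error status rather than a version.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical test client: calls caller-service repeatedly and counts which
// callme-service version produced each response.
final class SplitChecker {

    private static final String MARKER = "Calling... ";

    // Extracts the callme-service version from a caller-service response body
    static String calleeVersion(String body) {
        int idx = body.indexOf(MARKER);
        if (idx < 0) {
            return "unparsed";
        }
        String callee = body.substring(idx + MARKER.length()).trim();
        int colon = callee.lastIndexOf(':');
        return colon < 0 ? "unparsed" : callee.substring(colon + 1);
    }

    // Counts outcomes: the callee version for HTTP 200, otherwise "HTTP <status>"
    static void tally(Map<String, Integer> counts, int status, String body) {
        String key = status == 200 ? calleeVersion(body) : "HTTP " + status;
        counts.merge(key, 1, Integer::sum);
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // Replace with your Minikube IP and the caller-service NodePort
        URI uri = URI.create("http://192.168.99.100:30873/caller/ping");
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(10)) // longer than the injected 3s delay
                .GET()
                .build();
        Map<String, Integer> counts = new TreeMap<>();
        for (int i = 0; i < 100; i++) {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            tally(counts, response.statusCode(), response.body());
        }
        System.out.println(counts);
    }
}
```

The parsing and counting are kept in small static methods so they can be checked without a running cluster; only main needs network access.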

You can analyze the request traces in the Zipkin console.

Or just take a look at the logs generated by the pods (for example, with kubectl logs).

Conclusion

This article applies to Istio 0.x. For the latest version of Istio, read Service Mesh on Kubernetes with Istio and Spring Boot.
