Microservices traffic management using Istio on Kubernetes

I have already described a simple example of route configuration between two microservices deployed on Kubernetes in one of my previous articles: Service Mesh with Istio on Kubernetes in 5 steps. You can refer to that article if you are interested in basic information about Istio and its deployment on Kubernetes via Minikube. Today we will create some more advanced traffic management rules based on the same sample applications as used in the previous article about Istio.

The source code of the sample applications is available on GitHub in the repository sample-istio-services (https://github.com/piomin/sample-istio-services.git). There are two sample applications, callme-service and caller-service, deployed in two different versions: 1.0 and 2.0. Version 1.0 is available in the branch v1 (https://github.com/piomin/sample-istio-services/tree/v1), while version 2.0 is in the branch v2 (https://github.com/piomin/sample-istio-services/tree/v2). Using these sample applications in different versions, I’m going to show you different traffic management strategies depending on an HTTP header set in the incoming requests.

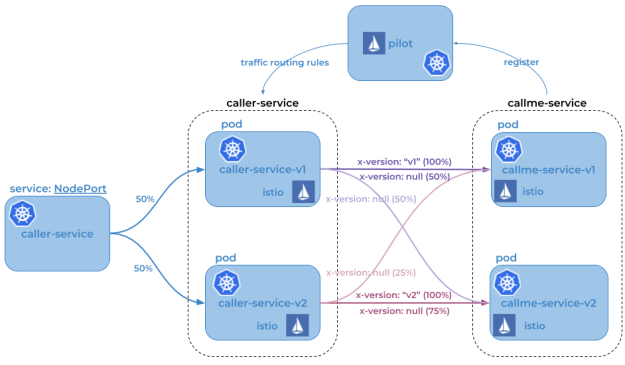

We may force caller-service to route all requests to a specific version of callme-service by setting the x-version header to v1 or v2. We can also omit this header from the request, in which case the traffic is split between all existing versions of the service. If the request reaches version v1 of caller-service, the traffic is split 50-50 between the two versions of callme-service. If the request is received by the v2 instance of caller-service, 75% of the traffic is forwarded to version v2 of callme-service and only 25% to v1. This scenario is illustrated in the diagram above.

Before we proceed to the example, I should say a few words about traffic management with Istio. If you have read my previous article about Istio, you probably know that each rule is assigned to a destination. Rules control the process of routing requests within a service mesh. One very important thing about them, especially for the purposes of the example illustrated in the diagram above, is that multiple rules can be applied to the same destination. The priority of every rule is determined by its precedence field. The principle is simple: the higher the value of this integer field, the higher the priority of the rule. As you might guess, if there is more than one rule with the same precedence value, the order in which those rules are evaluated is undefined. In addition to a destination, we may also define a source of the request in order to restrict a rule to a specific caller. If there are multiple deployments of a calling service, we can even narrow a rule down to one of them by setting the source’s labels field. Of course, we can also match on attributes of an HTTP request such as uri, scheme or headers.
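To make the precedence semantics concrete, here is a minimal Java sketch of how such an evaluation could work: rules are tried from the highest precedence value to the lowest, and the first matching rule wins. The RuleEvaluator class and its Rule and Request types are hypothetical and only model the idea; this is not how Istio or its Envoy sidecar is actually implemented. Because Java's stream sort is stable, rules with equal precedence are tried in list order here, whereas Istio leaves that order undefined.

```java
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Predicate;

class RuleEvaluator {

    // Hypothetical view of a request: the calling service, its pod labels and the HTTP headers.
    record Request(String sourceName, Map<String, String> sourceLabels, Map<String, String> headers) {}

    // Hypothetical, simplified stand-in for a route rule: a name, a precedence and a match condition.
    record Rule(String name, int precedence, Predicate<Request> match) {}

    // Tries rules from the highest precedence value to the lowest and returns the first match.
    // The stream sort is stable, so equal precedences keep list order (Istio leaves it undefined).
    static Optional<Rule> select(List<Rule> rules, Request request) {
        return rules.stream()
                .sorted(Comparator.comparingInt(Rule::precedence).reversed())
                .filter(rule -> rule.match().test(request))
                .findFirst();
    }

    public static void main(String[] args) {
        List<Rule> rules = List.of(
                // Lowest priority: matches every request coming from caller-service.
                new Rule("catch-all", 1, r -> "caller-service".equals(r.sourceName())),
                // Highest priority: additionally requires the header x-version: v1.
                new Rule("header-v1", 4, r -> "caller-service".equals(r.sourceName())
                        && "v1".equals(r.headers().get("x-version"))));

        Request withHeader = new Request("caller-service", Map.of("version", "v2"), Map.of("x-version", "v1"));
        Request withoutHeader = new Request("caller-service", Map.of("version", "v2"), Map.of());

        System.out.println(select(rules, withHeader).map(Rule::name).orElse("none"));    // header-v1
        System.out.println(select(rules, withoutHeader).map(Rule::name).orElse("none")); // catch-all
    }
}
```

The rule list is deliberately given in the "wrong" order to show that list position does not matter when precedence values differ.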

Ok, now let’s take a look at the rule with the highest priority. Its name is callme-service-v1 (1). It applies to callme-service (2) and has the highest priority of all the rules (3). It is applied only to requests sent by caller-service (4) that contain the HTTP header x-version with the value v1 (5). This route rule forwards such requests only to version v1 of callme-service (6).

apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: callme-service-v1 # (1)
spec:
  destination:
    name: callme-service # (2)
  precedence: 4 # (3)
  match:
    source:
      name: caller-service # (4)
    request:
      headers:
        x-version:
          exact: "v1" # (5)
  route:
  - labels:
      version: v1 # (6)

Here’s the fragment of the first diagram, which is handled by this route rule.

The next rule callme-service-v2 (1) has a lower priority (2). However, it does not conflict with the first rule, because it applies only to the requests containing x-version header with value v2 (3). It forwards all requests to version v2 of callme-service (4).

apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: callme-service-v2 # (1)
spec:
  destination:
    name: callme-service
  precedence: 3 # (2)
  match:
    source:
      name: caller-service
    request:
      headers:
        x-version:
          exact: "v2" # (3)
  route:
  - labels:
      version: v2 # (4)

As before, here’s the fragment of the first diagram, which is handled by this route rule.

The rule callme-service-v1-default (1), visible in the code fragment below, has a lower priority (2) than the two previously described rules. In practice, it means that it is applied only if the conditions defined in the two previous rules are not fulfilled. Such a situation occurs if you do not pass the x-version header in the HTTP request, or if it has a value other than v1 or v2. The rule visible below applies only to requests sent by the instances of caller-service labeled with version v1 (3). Finally, the traffic to callme-service is load balanced 50-50 between the two versions of that service (4).

apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: callme-service-v1-default # (1)
spec:
  destination:
    name: callme-service
  precedence: 2 # (2)
  match:
    source:
      name: caller-service
      labels:
        version: v1 # (3)
  route: # (4)
  - labels:
      version: v1
    weight: 50
  - labels:
      version: v2
    weight: 50

Here’s the fragment of the first diagram, which is handled by this route rule.
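The weights in the route section are relative shares of traffic. One common way to implement such a split is to draw a random number and walk the cumulative weights, as in the hypothetical Java sketch below. It only illustrates the idea; the Envoy sidecar that actually applies these rules may select destinations differently.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Random;

class WeightedRoute {

    // Picks a key with probability proportional to its weight, e.g. {v1=50, v2=50}.
    // Assumes non-negative weights with a positive sum (the rules in this article sum to 100).
    static String pick(Map<String, Integer> weights, Random random) {
        int total = weights.values().stream().mapToInt(Integer::intValue).sum();
        int roll = random.nextInt(total); // uniform in [0, total)
        int cumulative = 0;
        for (Map.Entry<String, Integer> entry : weights.entrySet()) {
            cumulative += entry.getValue();
            if (roll < cumulative) {
                return entry.getKey();
            }
        }
        // Not reached when the weights sum to a positive value.
        throw new IllegalStateException("weights must have a positive sum");
    }

    public static void main(String[] args) {
        Map<String, Integer> weights = new LinkedHashMap<>();
        weights.put("v1", 50);
        weights.put("v2", 50);
        Random random = new Random(42); // fixed seed so the run is repeatable
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (int i = 0; i < 10_000; i++) {
            counts.merge(pick(weights, random), 1, Integer::sum);
        }
        System.out.println(counts);
    }
}
```

With 10,000 draws the printed counts should be close to 50-50; changing the weights to 25 and 75 models the callme-service-v2-default rule.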

The last rule is pretty similar to the previously described callme-service-v1-default. Its name is callme-service-v2-default (1), and it applies only to requests sent by version v2 of caller-service (3). It has the lowest priority (2) and splits traffic between the two versions of callme-service in proportions 75-25 in favor of version v2 (4).

apiVersion: config.istio.io/v1alpha2
kind: RouteRule
metadata:
  name: callme-service-v2-default # (1)
spec:
  destination:
    name: callme-service
  precedence: 1 # (2)
  match:
    source:
      name: caller-service
      labels:
        version: v2 # (3)
  route: # (4)
  - labels:
      version: v1
    weight: 25
  - labels:
      version: v2
    weight: 75

As before, I have also included the diagram illustrating the behaviour of this rule.
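Taken together, the four rules can be read as a single decision function. The following Java sketch is a hypothetical restatement of that logic, not code from the sample repository. It assumes the request always comes from caller-service (all four rules match only on that source) and returns the route weights of the rule that would win. It does not model Istio's default behaviour when no rule matches.

```java
import java.util.Map;

class CallmeRouting {

    // Restates the four route rules as one function, assuming the request comes from caller-service.
    // callerVersion is the version label of the calling pod; xVersion may be null (header absent).
    static Map<String, Integer> route(String callerVersion, String xVersion) {
        if ("v1".equals(xVersion)) {         // callme-service-v1, precedence 4
            return Map.of("v1", 100);
        }
        if ("v2".equals(xVersion)) {         // callme-service-v2, precedence 3
            return Map.of("v2", 100);
        }
        if ("v1".equals(callerVersion)) {    // callme-service-v1-default, precedence 2
            return Map.of("v1", 50, "v2", 50);
        }
        if ("v2".equals(callerVersion)) {    // callme-service-v2-default, precedence 1
            return Map.of("v1", 25, "v2", 75);
        }
        return Map.of();                     // no rule matches: not modelled in this sketch
    }

    // Expected percentage of header-less traffic reaching callme-service v2, given the
    // (hypothetical) fraction of that traffic handled by caller-service v1.
    static double expectedV2Share(double fractionToCallerV1) {
        double viaCallerV1 = route("v1", null).getOrDefault("v2", 0);
        double viaCallerV2 = route("v2", null).getOrDefault("v2", 0);
        return fractionToCallerV1 * viaCallerV1 + (1 - fractionToCallerV1) * viaCallerV2;
    }

    public static void main(String[] args) {
        System.out.println(route("v2", "v1"));   // the header wins over the caller version
        System.out.println(route("v1", null));   // no header: 50-50 default for caller v1
        System.out.println(route("v2", "v3"));   // unknown header value falls through to the default rule
        System.out.println(expectedV2Share(0.5));
    }
}
```

If, hypothetically, half of the header-less requests reach each version of caller-service, expectedV2Share(0.5) gives 0.5 × 50 + 0.5 × 75 = 62.5, so roughly 62.5% of that traffic should end up on version v2 of callme-service.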

All the rules may be placed inside a single file. In that case, they should be separated by a line containing ---. This file is available in the code repository inside the callme-service module as multi-rule.yaml. To deploy all the defined rules on Kubernetes, just execute the following command.

$ kubectl apply -f multi-rule.yaml

After a successful deployment, you may check the list of available rules by running the command istioctl get routerule.

Before we start any tests, we obviously need to have the sample applications deployed on Kubernetes. These applications are really simple and pretty similar to the applications used for tests in my previous article about Istio. The controller visible below implements the method GET /callme/ping, which logs and returns the application name and version taken from pom.xml, together with the value of the x-version HTTP header received in the request.

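import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.info.BuildProperties;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestHeader;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/callme")
public class CallmeController {

    private static final Logger LOGGER = LoggerFactory.getLogger(CallmeController.class);

    @Autowired
    BuildProperties buildProperties;

    @GetMapping("/ping")
    public String ping(@RequestHeader(name = "x-version", required = false) String version) {
        // Log and return the application name and version from the build info, plus the received header
        LOGGER.info("Ping: name={}, version={}, header={}", buildProperties.getName(), buildProperties.getVersion(), version);
        return buildProperties.getName() + ":" + buildProperties.getVersion() + " with version " + version;
    }

}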

Here’s the controller class that implements the method GET /caller/ping. It returns the version of caller-service taken from pom.xml and calls the method GET /callme/ping exposed by callme-service. It has to copy the x-version header to the request it sends to the downstream service, because the route rules shown above match on the headers of that outgoing request, and the header is not forwarded automatically.

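import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.info.BuildProperties;
import org.springframework.http.HttpEntity;
import org.springframework.http.HttpHeaders;
import org.springframework.http.HttpMethod;
import org.springframework.http.ResponseEntity;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestHeader;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestTemplate;

@RestController
@RequestMapping("/caller")
public class CallerController {

    private static final Logger LOGGER = LoggerFactory.getLogger(CallerController.class);

    @Autowired
    BuildProperties buildProperties;

    // A RestTemplate bean has to be declared elsewhere in the application; Spring Boot does not create one by default
    @Autowired
    RestTemplate restTemplate;

    @GetMapping("/ping")
    public String ping(@RequestHeader(name = "x-version", required = false) String version) {
        LOGGER.info("Ping: name={}, version={}, header={}", buildProperties.getName(), buildProperties.getVersion(), version);
        // Propagate x-version to callme-service so that the Istio route rules can match on it
        HttpHeaders headers = new HttpHeaders();
        if (version != null) {
            headers.set("x-version", version);
        }
        HttpEntity<Void> entity = new HttpEntity<>(headers);
        ResponseEntity<String> response = restTemplate.exchange("http://callme-service:8091/callme/ping", HttpMethod.GET, entity, String.class);
        return buildProperties.getName() + ":" + buildProperties.getVersion() + ". Calling... " + response.getBody() + " with header " + version;
    }

}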
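The key detail in this controller is header propagation: the sidecar does not copy headers from the incoming request to outgoing ones, so the x-version match in the route rules only sees what CallerController sets explicitly. The sketch below shows the same conditional copy using the JDK's java.net.http.HttpRequest instead of Spring's RestTemplate. The HeaderPropagation class is hypothetical and not part of the sample repository; it only builds the request, so it runs without a cluster.

```java
import java.net.URI;
import java.net.http.HttpRequest;

class HeaderPropagation {

    // Builds the downstream request to callme-service, copying x-version only when the
    // incoming request carried it (mirrors the null check in CallerController).
    static HttpRequest downstreamRequest(String xVersion) {
        HttpRequest.Builder builder = HttpRequest
                .newBuilder(URI.create("http://callme-service:8091/callme/ping"))
                .GET();
        if (xVersion != null) {
            builder.header("x-version", xVersion);
        }
        return builder.build();
    }

    public static void main(String[] args) {
        // Print the header value that would be sent, or <absent> if none is set
        System.out.println(downstreamRequest("v2").headers().firstValue("x-version").orElse("<absent>"));
        System.out.println(downstreamRequest(null).headers().firstValue("x-version").orElse("<absent>"));
    }
}
```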

Now we can proceed to building the applications and deploying them on Kubernetes. Here are the next steps.

1. Building application

First, switch to the branch v1 and build the whole sample-istio-services project by executing the mvn clean install command.

2. Building Docker image

The Dockerfiles are placed in the root directory of every application. Build their Docker images by executing the following commands, each from the corresponding application's directory.

$ docker build -t piomin/callme-service:1.0 .

$ docker build -t piomin/caller-service:1.0 .

Alternatively, you may omit this step, because images piomin/callme-service and piomin/caller-service are available on my Docker Hub account.

3. Inject Istio components to Kubernetes deployment file

The Kubernetes YAML deployment file is available in the root directory of every application as deployment.yaml. Save the output of the following command to a separate file, for example deployment-with-istio.yaml.

$ istioctl kube-inject -f deployment.yaml > deployment-with-istio.yaml

4. Deployment on Kubernetes

Finally, you can execute a well-known kubectl command in order to deploy a Docker container with our sample application.

$ kubectl apply -f deployment-with-istio.yaml

Then switch to branch v2, and repeat the steps described above for version 2.0 of the sample applications. The final deployment result is visible in the picture below.

One very useful thing when running Istio on Kubernetes is its out-of-the-box integration with tools such as Zipkin, Grafana and Prometheus. Istio automatically generates metrics that are collected by Prometheus, for example the total number of requests in the istio_request_count metric. YAML deployment files for these add-ons are available in the ${ISTIO_HOME}/install/kubernetes/addons directory. Before installing Prometheus using kubectl, I suggest changing its service type from the default ClusterIP to NodePort by adding the line type: NodePort.

apiVersion: v1
kind: Service
metadata:
  annotations:
    prometheus.io/scrape: 'true'
  labels:
    name: prometheus
  name: prometheus
  namespace: istio-system
spec:
  type: NodePort
  selector:
    app: prometheus
  ports:
  - name: prometheus
    protocol: TCP
    port: 9090

Then run the command kubectl apply -f prometheus.yaml to deploy Prometheus on Kubernetes. The deployment is available in the istio-system namespace. To check the external port of the service, run the following command. For me, it is available at http://192.168.99.100:32293.

In the following diagram, visualized using Prometheus, I show only the requests sent to callme-service. Green indicates requests received by version v2 of the service, while red indicates requests processed by version v1. As you can see in this diagram, at first I sent requests to caller-service with the HTTP header x-version set to v2, then I stopped setting this header and the traffic was split between the two deployed versions of the service. Finally, I set it to v1. I defined the expression rate(istio_request_count{callme-service.default.svc.cluster.local}[1m]), which returns the per-second rate of requests received by callme-service, averaged over the last minute.
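For readers unfamiliar with PromQL, rate() computes the per-second increase of a counter over the given window, taking counter resets into account. The Java sketch below is a simplified, hypothetical approximation: it sums the increases between consecutive samples and divides by the elapsed time, but unlike Prometheus it does not extrapolate to the edges of the window. The sample values are made up for illustration.

```java
class CounterRate {

    // Simplified per-second rate of a counter. Each sample is {timestampSeconds, counterValue},
    // ordered by time. A decrease is treated as a counter reset (the counter restarted from zero),
    // as Prometheus does; unlike Prometheus, there is no extrapolation to the window boundaries.
    static double rate(double[][] samples) {
        if (samples.length < 2) {
            return 0.0; // not enough data (Prometheus would return no result here)
        }
        double increase = 0.0;
        for (int i = 1; i < samples.length; i++) {
            double delta = samples[i][1] - samples[i - 1][1];
            increase += delta >= 0 ? delta : samples[i][1];
        }
        double elapsed = samples[samples.length - 1][0] - samples[0][0];
        return elapsed > 0 ? increase / elapsed : 0.0;
    }

    public static void main(String[] args) {
        // Hypothetical istio_request_count samples taken every 15 seconds over one minute.
        double[][] samples = {{0, 100}, {15, 115}, {30, 130}, {45, 145}, {60, 160}};
        System.out.println(rate(samples)); // 60 requests in 60 seconds: prints 1.0
    }
}
```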

Testing

Before sending some test requests to caller-service, we need to obtain its address on Kubernetes. After executing the following command, you can see that it is available at http://192.168.99.100:32237/caller/ping.

We have four possible scenarios. First, when we set the header x-version to v1, the request will always be routed to callme-service-v1.

If the x-version header is not included in the request, the traffic will be split between callme-service-v1…

… and callme-service-v2.

Finally, if we set the header x-version to v2, the request will always be routed to callme-service-v2.
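To check the split over more than a handful of requests, you could send a batch of requests and count which version of callme-service answered. The PingTally class below is a hypothetical helper, not part of the sample repository. It assumes the response format produced by the two controllers shown earlier and that the build names are callme-service and caller-service; pass the caller-service URL, and optionally an x-version value, as arguments.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class PingTally {

    // Matches the callme-service part of a caller-service response, e.g.
    // "caller-service:1.0. Calling... callme-service:2.0 with version null with header null".
    private static final Pattern CALLME_VERSION = Pattern.compile("callme-service:(\\d+(?:\\.\\d+)*)");

    static String callmeVersion(String body) {
        Matcher matcher = CALLME_VERSION.matcher(body);
        return matcher.find() ? matcher.group(1) : "unknown";
    }

    // Counts how many responses came from each callme-service version.
    static Map<String, Integer> tally(List<String> bodies) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String body : bodies) {
            counts.merge(callmeVersion(body), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        if (args.length == 0) {
            System.out.println("usage: java PingTally.java <caller-service URL> [x-version]");
            return;
        }
        HttpClient client = HttpClient.newHttpClient();
        List<String> bodies = new ArrayList<>();
        for (int i = 0; i < 20; i++) {
            HttpRequest.Builder builder = HttpRequest.newBuilder(URI.create(args[0])).GET();
            if (args.length > 1) {
                builder.header("x-version", args[1]); // omit the argument to exercise the weighted split
            }
            HttpResponse<String> response = client.send(builder.build(), HttpResponse.BodyHandlers.ofString());
            bodies.add(response.body());
        }
        System.out.println(tally(bodies));
    }
}
```

For example, running it with http://192.168.99.100:32237/caller/ping as the only argument sends 20 header-less requests and prints how many were answered by each callme-service version.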

Conclusion

Using Istio you can easily create and apply simple and more advanced traffic management rules to the applications deployed on Kubernetes. You can also monitor metrics and traces through the integration between Istio and Zipkin, Prometheus and Grafana.
