Deploying Spring Cloud Microservices on HashiCorp’s Nomad

Nomad is somewhat less popular than other HashiCorp products such as Consul, Terraform, or Vault. It is also less widespread than competing orchestrators like Kubernetes and Docker Swarm. However, it has its advantages. While Kubernetes is focused on containers, Nomad is more general-purpose: it runs containerized Docker applications as well as plain applications delivered as executable JAR files. Nomad is also architecturally much simpler. It ships as a single binary for both clients and servers, and it does not require any external services for coordination or storage.

In this article I’m going to show you how to install, configure, and use Nomad to run microservices built with Spring Boot and Spring Cloud. Let’s move on.

Step 1. Installing and running Nomad

HashiCorp’s Nomad can easily be started on Windows. Just download it from https://www.nomadproject.io/downloads.html and add the nomad.exe file to your PATH. Now you can run Nomad commands from the command line. Let’s begin by starting the Nomad agent. For simplicity, we will run it in development mode (-dev), in which it acts as both a client and a server. Here’s the command that starts the Nomad agent on my local machine.

```shell
$ nomad agent -dev -network-interface="WiFi" -consul-address=192.168.99.100:8500
```

You may need to pass the selected network interface explicitly with -network-interface. We also need to integrate the agent with Consul service discovery (-consul-address) for the inter-service communication discussed later in this article. The easiest way to run Consul on your local machine is as a Docker container. The following command launches a single-node Consul server and exposes it on port 8500. If you run Docker on Windows in a Docker Machine VM, it is probably available at 192.168.99.100.

```shell
$ docker run -d --name consul -p 8500:8500 consul
```

Step 2. Creating a job

Nomad is a tool for managing a cluster of machines and running applications on them. To run an application there, we first have to create a job. A job is the primary configuration unit that users interact with when using Nomad: a specification of the tasks that Nomad should run. A job consists of one or more groups, and each group may contain multiple tasks.

Some properties are required, for example datacenters. You should also set the type parameter, which selects the scheduler. I set it to service, which is designed for long-lived services that should never go down, such as an application exposing an HTTP API.
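To make the job, group, and task hierarchy and the effect of count more concrete, here is a minimal plain-Java sketch. The records Job, Group, and Task and the two helper methods are hypothetical and exist only for illustration; they are not part of any Nomad client library. The sketch shows that resources are declared per task and that count multiplies them for the whole group, using the values of the caller-service job from this article.

```java
import java.util.List;

// Hypothetical, simplified model of a Nomad job specification:
// a job contains groups, and each group contains tasks.
final class NomadJobModel {

    // Resources requested by a single task instance (CPU in MHz, memory in MB).
    record Task(String name, String driver, int cpuMhz, int memoryMb) {}

    // A group is started `count` times; every instance runs all of its tasks.
    record Group(String name, int count, List<Task> tasks) {}

    // The job is the unit submitted to Nomad; `type` selects the scheduler.
    record Job(String id, String type, List<String> datacenters, List<Group> groups) {}

    // Total number of task instances Nomad has to place for the whole job.
    static int totalTaskInstances(Job job) {
        return job.groups().stream()
                .mapToInt(g -> g.count() * g.tasks().size())
                .sum();
    }

    // Total memory reserved in the cluster: per-task memory multiplied by the group count.
    static int totalMemoryMb(Job job) {
        return job.groups().stream()
                .mapToInt(g -> g.count() * g.tasks().stream().mapToInt(Task::memoryMb).sum())
                .sum();
    }

    public static void main(String[] args) {
        // Mirrors the caller-service job: one group with count = 2 and
        // one "api" task with cpu = 500 and memory = 300.
        Job job = new Job("caller-service", "service", List.of("dc1"),
                List.of(new Group("caller", 2,
                        List.of(new Task("api", "java", 500, 300)))));
        System.out.println("Task instances: " + totalTaskInstances(job));
        System.out.println("Reserved memory (MB): " + totalMemoryMb(job));
    }
}
```

For the caller-service job this gives 2 task instances and 600 MB of reserved memory, which is worth keeping in mind when a single development agent hosts several services.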

Let’s take a look at the job descriptor file. The most important elements of the configuration are marked with sequence numbers:

- (1) Property count specifies the number of instances of this group that should be running. In practice it scales the number of instances of the service started by the task. Here, it has been set to 2.
- (2) Property driver specifies the driver that Nomad clients should use to run the task. The driver name corresponds to the technology used for running the application. For example, we can set docker or rkt for containerization solutions, or java for executing Java applications packaged as JAR files. Here, the property has been set to java.
- (3) After setting the driver, we should provide some configuration for it in the job spec. There are several options available for the java driver. I decided to set the absolute path to the application JAR and some JVM options related to memory limits.
- (4) We may set resource requirements for the task, including memory, network, CPU, and more. Our task requires 500 MHz of CPU and at most 300 MB of RAM, and it enables dynamic port allocation for the port labeled "http".
- (5) Now, a very important point. When the task is started, it is passed an additional environment variable named NOMAD_HOST_PORT_http, which indicates the host port that the HTTP service is bound to. The suffix http relates to the label set for the port.
- (6) The service stanza inside the task specifies the integration with Consul for service discovery. Nomad automatically registers the task under the provided name when it starts and deregisters it when the task dies. As mentioned above, the port number is generated automatically by Nomad. By passing the label http as the port, I force Nomad to register the service in Consul with that automatically generated port.

```hcl
job "caller-service" {
  datacenters = ["dc1"]
  type = "service"

  group "caller" {
    count = 2 # (1)

    task "api" {
      driver = "java" # (2)

      config { # (3)
        jar_path = "C:\\Users\\minkowp\\git\\sample-nomad-java-services\\caller-service\\target\\caller-service-1.0.0-SNAPSHOT.jar"
        jvm_options = ["-Xmx256m", "-Xms128m"]
      }

      resources { # (4)
        cpu = 500
        memory = 300

        network {
          port "http" {} # (5)
        }
      }

      service { # (6)
        name = "caller-service"
        port = "http"
      }
    }

    restart {
      attempts = 1
    }
  }
}
```

Once we have saved the content above as job.nomad, we can submit it to the Nomad agent with the following command.

```shell
$ nomad job run job.nomad
```
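Because the task declares memory = 300 while the JVM is started with -Xmx256m, the heap has to leave room for non-heap JVM memory such as metaspace, thread stacks, and the code cache. The helper below is a hypothetical pre-flight check, not a Nomad feature: it parses -Xmx from a jvm_options list and reports how much of the task's memory remains outside the heap. How strictly Nomad enforces the memory value depends on the platform, so treat this as a sanity check under that assumption.

```java
import java.util.List;
import java.util.Locale;

// Hypothetical pre-flight check: how much of the task memory is left
// after the JVM heap configured with -Xmx?
final class HeapFitCheck {

    // Parses "-Xmx256m", "-Xmx1g", "-Xmx524288k" or a plain byte count into megabytes.
    // Returns -1 if no -Xmx option is present.
    static long maxHeapMb(List<String> jvmOptions) {
        for (String opt : jvmOptions) {
            if (opt.startsWith("-Xmx")) {
                String value = opt.substring(4).toLowerCase(Locale.ROOT);
                char unit = value.charAt(value.length() - 1);
                if (Character.isDigit(unit)) {
                    // No suffix means the value is given in bytes.
                    return Long.parseLong(value) / (1024 * 1024);
                }
                long number = Long.parseLong(value.substring(0, value.length() - 1));
                switch (unit) {
                    case 'k': return number / 1024;
                    case 'm': return number;
                    case 'g': return number * 1024;
                    default: throw new IllegalArgumentException("Unknown unit in " + opt);
                }
            }
        }
        return -1;
    }

    // Memory left for non-heap usage (metaspace, thread stacks, code cache, ...).
    static long headroomMb(List<String> jvmOptions, long taskMemoryMb) {
        long heap = maxHeapMb(jvmOptions);
        if (heap < 0) {
            throw new IllegalArgumentException("No -Xmx option found");
        }
        return taskMemoryMb - heap;
    }

    public static void main(String[] args) {
        // Values taken from the job descriptor: jvm_options and resources.memory.
        List<String> jvmOptions = List.of("-Xmx256m", "-Xms128m");
        System.out.println("Non-heap headroom: " + headroomMb(jvmOptions, 300) + " MB");
    }
}
```

With the values from job.nomad the headroom is 44 MB. If the application later needs a bigger heap, memory in the resources block has to grow with it.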

Step 3. Building sample microservices

The source code of the sample applications is available on GitHub in my repository sample-nomad-java-services. There are two simple microservices: callme-service and caller-service. I have already used this sample in previous articles to demonstrate inter-service communication mechanisms. The callme-service microservice does nothing more than expose the GET /callme/ping endpoint, which returns the service’s name and version.

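```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.info.BuildProperties;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/callme")
public class CallmeController {

    private static final Logger LOGGER = LoggerFactory.getLogger(CallmeController.class);

    // BuildProperties is available when build-info.properties is generated
    // by the build-info goal of spring-boot-maven-plugin.
    @Autowired
    BuildProperties buildProperties;

    // Returns "<name>:<version>" of this service.
    @GetMapping("/ping")
    public String ping() {
        LOGGER.info("Ping: name={}, version={}", buildProperties.getName(), buildProperties.getVersion());
        return buildProperties.getName() + ":" + buildProperties.getVersion();
    }
}
```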

The implementation of the caller-service endpoint is a little more complicated. First, we have to connect the service to Consul in order to fetch the list of registered instances of callme-service. Since the sample microservices are built with Spring Boot, the most convenient way to enable a Consul client is the Spring Cloud Consul library.

```xml
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-consul-discovery</artifactId>
</dependency>
```

We should override the auto-configured connection settings in application.yml. In addition to host and port, we have also set the spring.cloud.consul.discovery.register property to false. We don’t want the discovery client to register the application in Consul after startup, because Nomad already does that.

```yaml
spring:
  application:
    name: caller-service
  cloud:
    consul:
      host: 192.168.99.100
      port: 8500
      discovery:
        register: false
```

Then we enable the Spring Cloud discovery client and a load-balanced RestTemplate in the main class of the application.

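```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.cloud.client.discovery.EnableDiscoveryClient;
import org.springframework.cloud.client.loadbalancer.LoadBalanced;
import org.springframework.context.annotation.Bean;
import org.springframework.web.client.RestTemplate;

@SpringBootApplication
@EnableDiscoveryClient
public class CallerApplication {

    public static void main(String[] args) {
        SpringApplication.run(CallerApplication.class, args);
    }

    // @LoadBalanced lets this RestTemplate resolve service names
    // (e.g. http://callme-service) through the discovery client.
    @Bean
    @LoadBalanced
    RestTemplate restTemplate() {
        return new RestTemplate();
    }
}
```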

Finally, we can implement the GET /caller/ping method, which calls the endpoint exposed by callme-service.

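```java
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.info.BuildProperties;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;
import org.springframework.web.client.RestTemplate;

@RestController
@RequestMapping("/caller")
public class CallerController {

    private static final Logger LOGGER = LoggerFactory.getLogger(CallerController.class);

    @Autowired
    BuildProperties buildProperties;

    @Autowired
    RestTemplate restTemplate;

    @GetMapping("/ping")
    public String ping() {
        LOGGER.info("Ping: name={}, version={}", buildProperties.getName(), buildProperties.getVersion());
        // "callme-service" is a service name resolved via Consul, not a DNS host name.
        String response = restTemplate.getForObject("http://callme-service/callme/ping", String.class);
        LOGGER.info("Calling: response={}", response);
        return buildProperties.getName() + ":" + buildProperties.getVersion() + ". Calling... " + response;
    }
}
```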
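The @LoadBalanced RestTemplate treats callme-service in http://callme-service/callme/ping as a logical service name rather than a host name: a client-side load balancer picks one of the instances returned by the discovery client and rewrites the URL. Round-robin is a common strategy for that choice and is the default in Spring Cloud LoadBalancer. The following plain-Java sketch only illustrates the idea; RoundRobinResolver and Instance are hypothetical names rather than Spring Cloud classes, and the addresses and ports are made-up examples.

```java
import java.net.URI;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

// Illustrative sketch of client-side round-robin load balancing.
// Not the Spring Cloud implementation; all names are hypothetical.
final class RoundRobinResolver {

    // One registered instance of a service (address and port as stored in Consul).
    record Instance(String host, int port) {}

    private final Map<String, List<Instance>> registry;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinResolver(Map<String, List<Instance>> registry) {
        this.registry = registry;
    }

    // Replaces the logical service name in the URI with a concrete host:port.
    URI resolve(URI logical) {
        List<Instance> instances = registry.getOrDefault(logical.getHost(), List.of());
        if (instances.isEmpty()) {
            throw new IllegalStateException("No instances of " + logical.getHost());
        }
        // floorMod keeps the index non-negative even after the counter overflows.
        Instance chosen = instances.get(Math.floorMod(counter.getAndIncrement(), instances.size()));
        String query = logical.getRawQuery() == null ? "" : "?" + logical.getRawQuery();
        return URI.create(logical.getScheme() + "://" + chosen.host() + ":" + chosen.port()
                + logical.getRawPath() + query);
    }

    public static void main(String[] args) {
        // Two hypothetical callme-service instances with Nomad-assigned dynamic ports.
        RoundRobinResolver resolver = new RoundRobinResolver(Map.of(
                "callme-service", List.of(
                        new Instance("192.168.1.10", 25001),
                        new Instance("192.168.1.10", 25002))));
        URI logical = URI.create("http://callme-service/callme/ping");
        for (int i = 0; i < 3; i++) {
            System.out.println(resolver.resolve(logical));
        }
    }
}
```

Successive calls alternate between the two registered instances, which is why the dynamic ports assigned by Nomad never have to appear in the caller’s code.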

As you probably remember, the application’s port is generated automatically by Nomad when the task is started, and it is passed to the application in the NOMAD_HOST_PORT_http environment variable. We therefore use this variable as the value of the server.port property in application.yml. The value after the colon (8090) is the default used when the variable is not set, for example when the application is started outside Nomad.

```yaml
server:
  port: ${NOMAD_HOST_PORT_http:8090}
```
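The placeholder ${NOMAD_HOST_PORT_http:8090} means: use the NOMAD_HOST_PORT_http value if it is defined, otherwise fall back to 8090. The sketch below mimics that rule in plain Java to make the behavior explicit; NomadPortResolver is a hypothetical helper, and in the real application Spring Boot performs this resolution itself. The environment is passed in as a map so the logic can be tried without Nomad.

```java
import java.util.Map;

// Mimics how the placeholder ${NOMAD_HOST_PORT_http:8090} is resolved:
// use the environment variable if it is defined, otherwise the default after the colon.
final class NomadPortResolver {

    static final String PORT_VARIABLE = "NOMAD_HOST_PORT_http";
    static final int DEFAULT_PORT = 8090;

    // Takes the environment as a parameter so the logic is easy to test.
    static int resolvePort(Map<String, String> env) {
        String value = env.get(PORT_VARIABLE);
        if (value == null) {
            return DEFAULT_PORT;
        }
        return Integer.parseInt(value.trim());
    }

    public static void main(String[] args) {
        // Inside a Nomad allocation the variable is set; when the JAR is started
        // locally (e.g. from the IDE) it is absent and 8090 is used instead.
        System.out.println("server.port = " + resolvePort(System.getenv()));
    }
}
```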

The last step is to build the whole sample-nomad-java-services project with the mvn clean install command.

Step 4. Using the Nomad web console

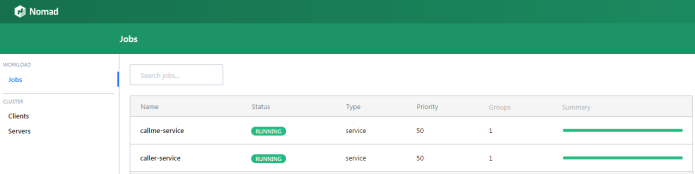

In the previous steps we have created, built, and deployed our sample applications on Nomad. Now we should verify the deployment. We can do that using the CLI (for example nomad job status caller-service) or the web console provided by Nomad, which is available at http://localhost:4646.

The main page of the web console shows a summary of existing jobs. If everything goes fine, the Status field is RUNNING and the Summary bar is green.
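The information shown by the web console comes from the Nomad agent’s HTTP API, served on the same port (4646). The sketch below queries two real endpoints, /v1/jobs and /v1/job/:job_id/allocations, with the JDK HttpClient (Java 11+) and prints the raw JSON. It assumes the dev-mode agent from Step 1 is reachable at localhost:4646; the class and method names are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Minimal sketch: query the Nomad HTTP API for the job list
// and for the allocations of caller-service.
final class NomadApiCheck {

    static URI jobsUri(String agent) {
        return URI.create(agent + "/v1/jobs");
    }

    static URI allocationsUri(String agent, String jobId) {
        return URI.create(agent + "/v1/job/" + jobId + "/allocations");
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String agent = "http://localhost:4646"; // dev-mode agent started in Step 1
        HttpClient client = HttpClient.newHttpClient();
        for (URI uri : new URI[] {jobsUri(agent), allocationsUri(agent, "caller-service")}) {
            HttpResponse<String> response = client.send(
                    HttpRequest.newBuilder(uri).GET().build(),
                    HttpResponse.BodyHandlers.ofString());
            // Raw JSON: look for "Status":"running" in the job list
            // and "ClientStatus":"running" in the allocations.
            System.out.println(uri + " -> HTTP " + response.statusCode());
            System.out.println(response.body());
        }
    }
}
```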

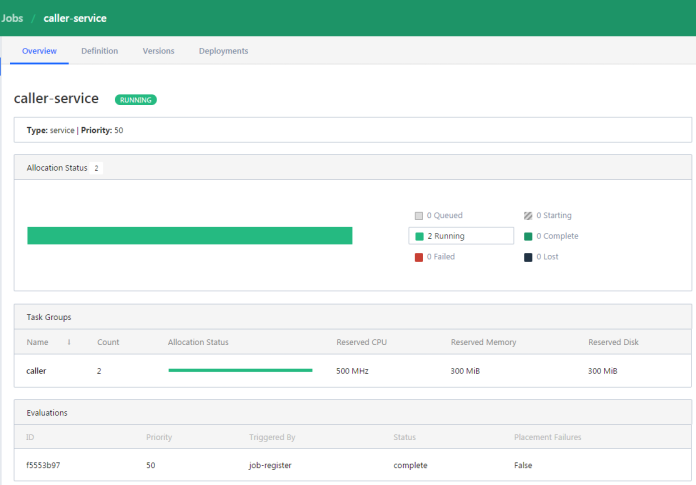

We can display the details of every job in the list. The next screen shows the history of the job, reserved resources, and the number of running instances (tasks).

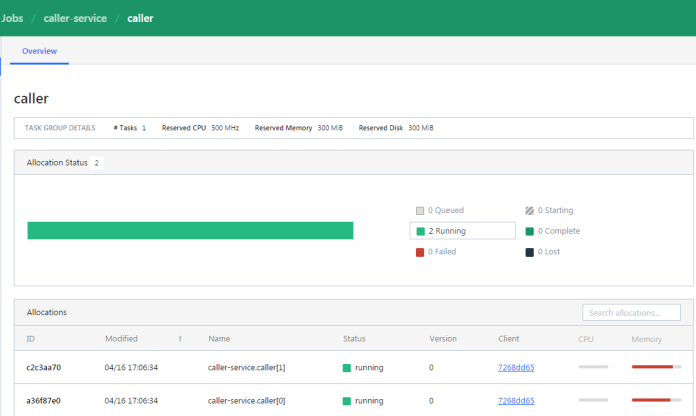

To check the details of a single task, navigate to the Task Group details.

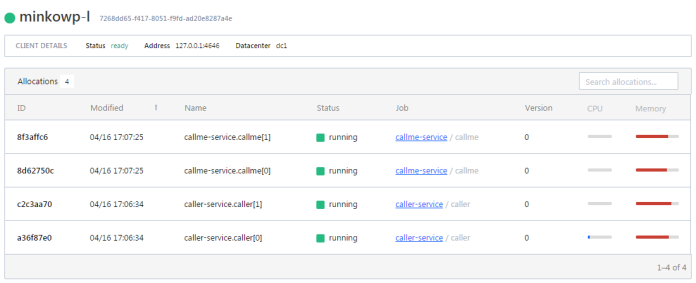

We can also display the details of the client node.

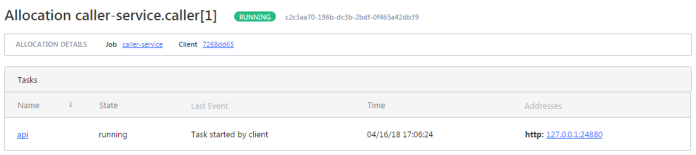

To display the details of an allocation, select its row in the table. You will be redirected to the allocation page, where you can check the IP address of the application instance.

Step 5. Testing a sample system

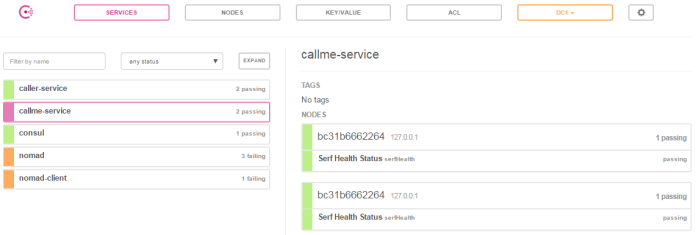

Assuming you have successfully deployed the applications on Nomad, you should see the following services registered in Consul.
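The same registrations can be checked without the Consul UI through Consul’s HTTP API. This sketch uses two real endpoints, /v1/catalog/services (all service names) and /v1/health/service/:service?passing=true (instances whose checks pass), and assumes Consul runs at 192.168.99.100:8500 as in Step 1. The class and method names are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

// Minimal sketch: list services registered by Nomad in Consul
// and the healthy instances of caller-service and callme-service.
final class ConsulRegistrationCheck {

    // Lists all service names known to the Consul catalog.
    static URI catalogServicesUri(String consul) {
        return URI.create(consul + "/v1/catalog/services");
    }

    // Lists instances of one service whose health checks are passing.
    static URI healthyInstancesUri(String consul, String service) {
        return URI.create(consul + "/v1/health/service/"
                + URLEncoder.encode(service, StandardCharsets.UTF_8) + "?passing=true");
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        String consul = "http://192.168.99.100:8500"; // Consul container from Step 1
        HttpClient client = HttpClient.newHttpClient();
        for (URI uri : new URI[] {
                catalogServicesUri(consul),
                healthyInstancesUri(consul, "caller-service"),
                healthyInstancesUri(consul, "callme-service")}) {
            HttpResponse<String> response = client.send(
                    HttpRequest.newBuilder(uri).GET().build(),
                    HttpResponse.BodyHandlers.ofString());
            System.out.println(uri + " -> HTTP " + response.statusCode());
            System.out.println(response.body());
        }
    }
}
```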

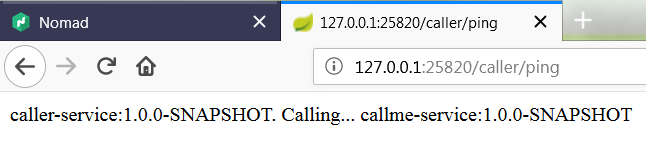

Now, if you call one of the two available instances of caller-service, you should see the following response. The address of a callme-service instance has been fetched from Consul through the Spring Cloud Consul client.
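To call caller-service from code instead of a browser, the sketch below sends GET /caller/ping with the JDK HttpClient and splits the answer according to the format built in CallerController.ping(): the caller’s name:version, then ". Calling... ", then the callee’s name:version. The address argument is an assumption: pass the host:port of one caller-service allocation as shown in the Nomad console (the localhost:8090 default only applies when the service runs outside Nomad). CallerPingClient and PingResult are hypothetical names.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Minimal sketch: call caller-service and split its response into
// the caller part and the callme-service part.
final class CallerPingClient {

    // Parsed form of "<name>:<version>. Calling... <name>:<version>".
    record PingResult(String caller, String callee) {}

    static final String SEPARATOR = ". Calling... ";

    static PingResult parse(String body) {
        int idx = body.indexOf(SEPARATOR);
        if (idx < 0) {
            throw new IllegalArgumentException("Unexpected response: " + body);
        }
        return new PingResult(body.substring(0, idx), body.substring(idx + SEPARATOR.length()));
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // host:port of one caller-service allocation, e.g. taken from the Nomad console.
        String address = args.length > 0 ? args[0] : "localhost:8090";
        HttpResponse<String> response = HttpClient.newHttpClient().send(
                HttpRequest.newBuilder(URI.create("http://" + address + "/caller/ping")).GET().build(),
                HttpResponse.BodyHandlers.ofString());
        PingResult result = parse(response.body());
        System.out.println("caller = " + result.caller());
        System.out.println("callee = " + result.callee());
    }
}
```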
