How to set up a Continuous Delivery environment

I have read several interesting articles and books about Continuous Delivery, because I had to set it up inside my organization. The most recent document on this subject I can recommend is the DZone Guide to DevOps. If you are interested in this area of software development, it can be really enlightening reading. The main purpose of this article is to show the practical side of Continuous Delivery: the tools which can be used to build such an environment. I’m going to show how to build a professional Continuous Delivery environment using:

- Jenkins – most popular open source automation server

- GitLab – web-based Git repository manager

- Artifactory – open source Maven repository manager

- Ansible – simple open source automation engine

- SonarQube – open source platform for continuous code quality

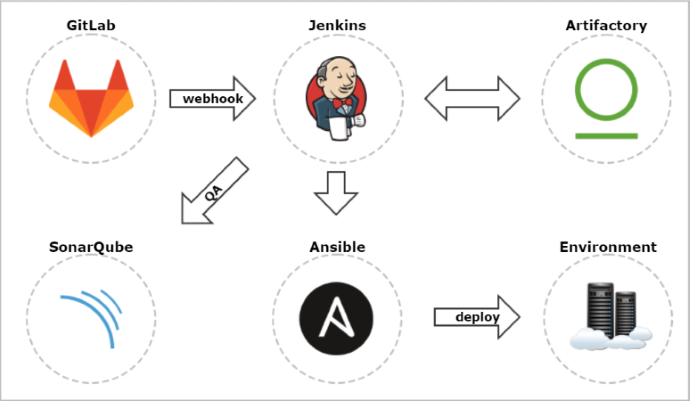

Here’s a picture showing our continuous delivery environment.

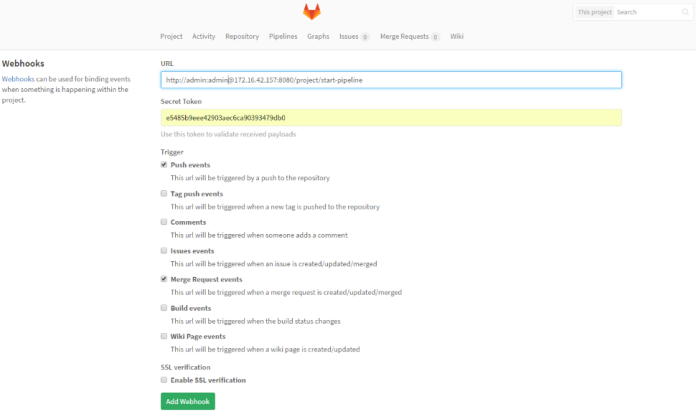

Changes pushed to the Git repository managed by the GitLab server are automatically propagated to Jenkins using a webhook. We enable the push and merge request triggers and disable SSL verification. In the URL field we have to put the Jenkins pipeline address with authentication credentials (user and password), and we also have to provide the secret token. This is the API token, which is visible in the Jenkins user profile under the Configure tab.
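As a rough illustration of the URL described above, the following Python sketch assembles a webhook address with embedded credentials. The host name, job name, user and password are hypothetical, and the `/project/<job name>` path is an assumption based on the convention used by the Jenkins GitLab plugin, so check your own Jenkins instance for the exact address.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def build_webhook_url(jenkins_url, job_name, user, password):
    """Return a webhook URL with credentials embedded in the authority part."""
    parts = urlsplit(jenkins_url)
    # Percent-encode the credentials so that characters such as '@' or ':'
    # cannot be confused with URL delimiters.
    credentials = f"{quote(user, safe='')}:{quote(password, safe='')}"
    netloc = f"{credentials}@{parts.netloc}"
    # Assumption: the job is exposed under /project/<job name>.
    path = f"{parts.path.rstrip('/')}/project/{quote(job_name)}"
    return urlunsplit((parts.scheme, netloc, path, "", ""))


# Hypothetical values, for illustration only.
url = build_webhook_url(
    "http://jenkins.example.com:8080", "start-pipeline", "ci-user", "s3cr3t"
)
print(url)
```

Encoding the credentials separately from the rest of the URL keeps a password containing `@` or `:` from silently changing the host part of the address.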

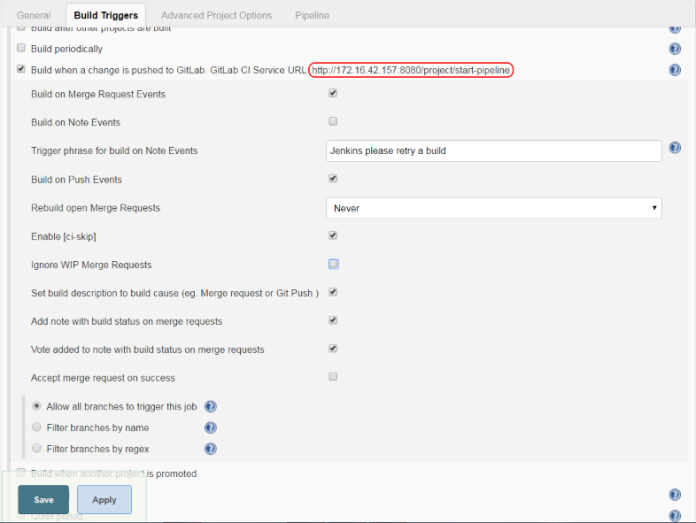

Here’s the Jenkins pipeline configuration in the ‘Build Triggers’ section. We have to enable the option ‘Build when a change is pushed to GitLab’. The GitLab CI Service URL is the address we have already set in the GitLab webhook configuration. Push and merge request events are enabled for all branches. An additional restriction can also be added for branch filtering: by name or by regex. To support this kind of trigger in Jenkins you need to have the GitLab plugin installed.
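To make the idea of branch filtering by name or by regex more concrete, here is a minimal Python sketch of such a decision. It is only an illustration of the concept, not the GitLab plugin’s implementation, and the function name, parameters and branch names are hypothetical; the plugin’s exact matching rules may differ.

```python
import re


def should_trigger(branch, names=(), pattern=None):
    """Decide whether an event on `branch` should start a build."""
    # No filter configured: events from all branches trigger the build.
    if not names and pattern is None:
        return True
    # Filter by name: the branch must be listed explicitly.
    if branch in names:
        return True
    # Filter by regex: the whole branch name must match the pattern.
    return pattern is not None and re.fullmatch(pattern, branch) is not None


print(should_trigger("feature/login"))                        # no filter
print(should_trigger("feature/login", names=("master",)))     # name filter
print(should_trigger("release/1.2", pattern=r"release/\d+\.\d+"))  # regex filter
```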

There are two kinds of events which can trigger a Jenkins build:

- push – a change in the source code is pushed to the Git repository

- merge request – a change in the source code is pushed to one branch and then the committer creates a merge request to the build branch from the GitLab management console
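The two event kinds listed above can be told apart by looking at the webhook payload. The Python sketch below assumes the field names `object_kind`, `ref` and `object_attributes.source_branch` / `target_branch` as they appear in GitLab webhook payloads to the best of my knowledge; the sample payloads are heavily trimmed and hypothetical.

```python
def describe_event(payload):
    """Return (event kind, source branch, target branch) for a webhook payload."""
    kind = payload.get("object_kind")
    if kind == "push":
        ref = payload["ref"]
        prefix = "refs/heads/"
        branch = ref[len(prefix):] if ref.startswith(prefix) else ref
        # A push concerns a single branch: it is both source and target.
        return kind, branch, branch
    if kind == "merge_request":
        attrs = payload["object_attributes"]
        return kind, attrs["source_branch"], attrs["target_branch"]
    raise ValueError(f"unsupported event kind: {kind!r}")


# Trimmed-down, hypothetical payloads for illustration.
push = {"object_kind": "push", "ref": "refs/heads/develop"}
merge = {
    "object_kind": "merge_request",
    "object_attributes": {
        "source_branch": "feature/login",
        "target_branch": "develop",
    },
}
print(describe_event(push))
print(describe_event(merge))
```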

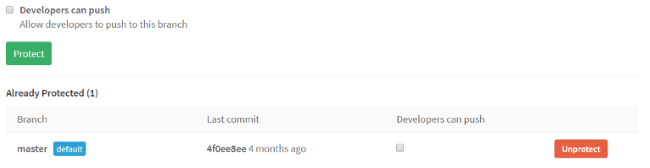

If you would like to use the first option, you have to disable protection of the build branch to allow direct pushes to that branch. If you use merge requests, branch protection needs to be activated.

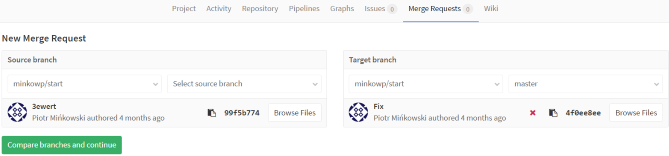

Creating a merge request from the GitLab console is very intuitive. In the ‘Merge request’ section we select the source and target branches and confirm the action.

OK, that was a lot of words about GitLab and Jenkins integration… Now you know how to configure it. You only have to decide whether you prefer push or merge request in your continuous delivery configuration. Merge requests are used for code review in GitLab, so they are a useful additional step in your continuous delivery pipeline. Let’s move on. We have to install some other plugins in Jenkins to integrate it with Artifactory, SonarQube and Ansible. Here’s the full list of Jenkins plugins I used for the continuous delivery process inside my organization:

Here’s the configuration of my Jenkins pipeline for a sample Maven project.

[code]
node {
    // Prepend the bin directory of the 'Maven3' tool installation to PATH
    withEnv(["PATH+MAVEN=${tool 'Maven3'}/bin"]) {
        stage('Checkout') {
            // Branch name is passed in by the GitLab webhook trigger
            def branch = env.gitlabBranch
            env.branch = branch
            git url: 'http://172.16.42.157/minkowp/start.git', credentialsId: '5693747c-2f45-4557-ada2-a1da9bbfe0af', branch: branch
        }
        stage('Test') {
            def pom = readMavenPom file: 'pom.xml'
            print "Build: " + pom.version
            env.POM_VERSION = pom.version
            // Do not fail the Maven run on test failures, so the report below is always published
            sh 'mvn clean test -Dmaven.test.failure.ignore=true'
            junit '**/target/surefire-reports/TEST-*.xml'
            currentBuild.description = "v${pom.version} (${env.branch})"
        }
        stage('QA') {
            // 'sonar' is the name of the SonarQube server configured in Jenkins
            withSonarQubeEnv('sonar') {
                sh 'mvn org.sonarsource.scanner.maven:sonar-maven-plugin:3.2:sonar'
            }
        }
        stage('Build') {
            def server = Artifactory.server "server1"
            def buildInfo = Artifactory.newBuildInfo()
            def rtMaven = Artifactory.newMavenBuild()
            rtMaven.tool = 'Maven3'
            rtMaven.deployer releaseRepo: 'libs-release-local', snapshotRepo: 'libs-snapshot-local', server: server
            rtMaven.resolver releaseRepo: 'remote-repos', snapshotRepo: 'remote-repos', server: server
            // Tests already ran in the 'Test' stage, so they are skipped here
            rtMaven.run pom: 'pom.xml', goals: 'clean install -Dmaven.test.skip=true', buildInfo: buildInfo
            publishBuildInfo server: server, buildInfo: buildInfo
        }
        stage('Deploy') {
            dir('ansible') {
                ansiblePlaybook playbook: 'preprod.yml'
            }
            mail from: 'ci@example.com', to: 'piotr.minkowski@play.pl', subject: "New version of start: '${env.POM_VERSION}'", body: "New version of start '${env.POM_VERSION}' has been deployed to the pre-production environment."
        }
    }
}
[/code]

There are five stages in my pipeline:

- Checkout – checking out the source code from a Git branch. The branch name is sent as a parameter by the GitLab webhook

- Test – running JUnit tests, creating a test report visible in Jenkins and changing the job description

- QA – scanning the source code using the SonarQube scanner

- Build – building the package, resolving artifacts from Artifactory and publishing the new application release to Artifactory

- Deploy – deploying the application package and configuration to the server using Ansible
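To show what the Test stage does with the project version, here is a small Python approximation of reading the version from `pom.xml` and formatting the job description as `v<version> (<branch>)`. It is a sketch only: the sample POM and the fallback to the parent version are my assumptions, and it does not claim to reproduce the behaviour of the Jenkins `readMavenPom` step.

```python
import xml.etree.ElementTree as ET

# Namespace used by Maven POM files.
POM_NS = {"m": "http://maven.apache.org/POM/4.0.0"}


def pom_version(pom_xml):
    """Extract the project version from the text of a pom.xml file."""
    root = ET.fromstring(pom_xml)
    version = root.find("m:version", POM_NS)
    if version is None:
        # Assumption: fall back to the version declared on the parent POM.
        version = root.find("m:parent/m:version", POM_NS)
    if version is None or not version.text:
        raise ValueError("no <version> element found in pom.xml")
    return version.text.strip()


def build_description(version, branch):
    """Format the description the same way the pipeline does."""
    return f"v{version} ({branch})"


# Hypothetical POM content, for illustration only.
SAMPLE_POM = """<project xmlns="http://maven.apache.org/POM/4.0.0">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.example</groupId>
  <artifactId>start</artifactId>
  <version>1.0.3-SNAPSHOT</version>
</project>"""

print(build_description(pom_version(SAMPLE_POM), "develop"))
```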

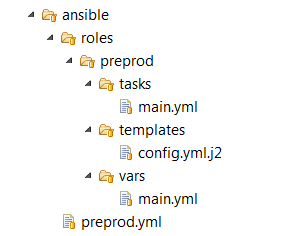

According to the Ansible website, it is a simple automation language that can perfectly describe an IT application infrastructure. It’s easy to learn, self-documenting, and doesn’t require a grad-level computer science degree to read. Ansible uses SSH keys to authenticate on the remote host, so you have to add your SSH public key to the authorized_keys file on the remote host before running Ansible commands against it. The main idea is to create a playbook with a set of Ansible commands. Playbooks are Ansible’s configuration, deployment, and orchestration language. They can describe a policy you want your remote systems to enforce, or a set of steps in a general IT process. Here is the directory structure with the Ansible configuration for application deployment.

Here’s my Ansible playbook code. It defines the remote host, the user to connect as and the role name. This file is used inside the Jenkins pipeline in the ansiblePlaybook step.

[code]
---
- hosts: pBPreprod
  remote_user: default
  roles:
    - preprod
[/code]
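Before wiring the playbook into Jenkins, it can be handy to run it by hand. The Python sketch below builds an `ansible-playbook` command line for the playbook above; the inventory file name `hosts` is hypothetical, and the optional `--check` flag asks Ansible for a dry run. This is my own illustration, not what the Jenkins ansiblePlaybook step executes internally.

```python
import shlex


def playbook_command(playbook, inventory=None, check=False):
    """Build the argument list for an ansible-playbook invocation."""
    cmd = ["ansible-playbook", playbook]
    if inventory is not None:
        # -i selects the inventory that defines the target hosts.
        cmd += ["-i", inventory]
    if check:
        # --check performs a dry run without changing the remote host.
        cmd.append("--check")
    return cmd


cmd = playbook_command("preprod.yml", inventory="hosts", check=True)
print(" ".join(shlex.quote(arg) for arg in cmd))

# To actually run it from the 'ansible' directory (requires Ansible installed):
#   import subprocess
#   subprocess.run(cmd, cwd="ansible", check=True)
```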

Here’s the main.yml file where we define the set of Ansible commands to run on the remote server.

[code]
---
- block:
    - name: Copy configuration file
      template: src=config.yml.j2 dest=/opt/start/config.yml
    - name: Copy jar file
      copy: src=../target/start.jar dest=/opt/start/start.jar
    - name: Run jar file
      shell: java -jar /opt/start/start.jar
[/code]

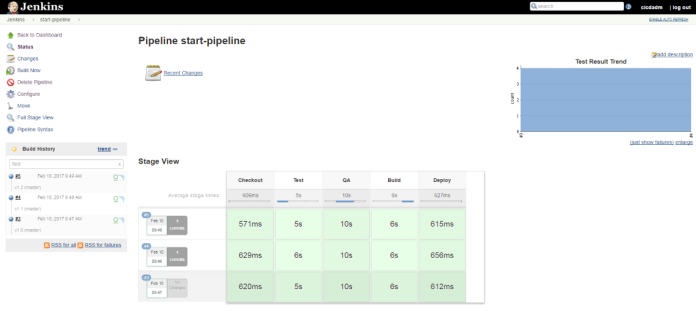

You can check the build results in the Jenkins console. There is also a nice pipeline visualization with stage execution times. Each build history record has a link to the Artifactory build information and the SonarQube scanner report.
