Integration tests on OpenShift using Arquillian Cube and Istio

In this tutorial I’ll show you how to use the Arquillian Cube OpenShift extension. Building integration tests for applications deployed on Kubernetes or OpenShift can look like a big challenge. Arquillian Cube, an Arquillian extension for managing Docker containers, makes it much simpler. Its Kubernetes extension helps you write and run integration tests for a Kubernetes/OpenShift application: it creates and manages a temporary namespace for your tests, applies all the Kubernetes resources required to set up the environment and, once everything is ready, runs the defined integration tests.

The good news is that Arquillian Cube OpenShift also supports Istio. You can apply Istio resources before executing tests. One of the most important features of Istio is the ability to control traffic behavior with rich routing rules, retries, delays, failovers, and fault injection. This lets you test how microservices behave when network communication goes wrong, for example on server errors or timeouts.

If you would like to run tests that use Istio resources on Minishift, you first need to install Istio there. To do that you need to change some privileges for your OpenShift user. Let’s do that.

1. Enabling Istio on Minishift

Istio requires some elevated privileges to run on OpenShift. To grant them to the current user we need to log in as a user with the cluster-admin role. First, we should enable the admin-user addon on Minishift by executing the following command.

$ minishift addons enable admin-user

After that you will be able to log in as the system:admin user, which has the cluster-admin role. With this user you can also grant the cluster-admin role to other users, for example admin. Let’s do that.

$ oc login -u system:admin

$ oc adm policy add-cluster-role-to-user cluster-admin admin

$ oc login -u admin -p admin

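Now, let’s create a new project dedicated to Istio and then add the required privileges. Note that the last command targets a different namespace: it grants the privileged SCC to the default service account in myproject, where the tests will run.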

$ oc new-project istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-ingress-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z default -n istio-system

$ oc adm policy add-scc-to-user anyuid -z prometheus -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-egressgateway-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-citadel-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-ingressgateway-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-cleanup-old-ca-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-mixer-post-install-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-mixer-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-pilot-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-sidecar-injector-service-account -n istio-system

$ oc adm policy add-scc-to-user anyuid -z istio-galley-service-account -n istio-system

$ oc adm policy add-scc-to-user privileged -z default -n myproject

Finally, we can proceed to installing the Istio components. I downloaded the newest version of Istio available at the time of writing, 1.0.1. The installation file is available in the install/kubernetes directory. You just have to apply it to your Minishift instance with the oc apply command.

$ oc apply -f install/kubernetes/istio-demo.yaml

2. Enabling Istio for Arquillian Cube OpenShift

I have already described how to use Arquillian Cube to run tests on OpenShift in the article Testing microservices on OpenShift using Arquillian Cube. Compared with the sample described in that article, we need to include one more dependency, which enables the Istio features.

<dependency>
    <groupId>org.arquillian.cube</groupId>
    <artifactId>arquillian-cube-istio-kubernetes</artifactId>
    <version>1.17.1</version>
    <scope>test</scope>
</dependency>

Now we can use the @IstioResource annotation to apply Istio resources to the OpenShift cluster, or inject the IstioAssistant bean to add and remove resources programmatically or to poll URLs until they become available.
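
To make the idea of polling a URL until it becomes available more concrete, here is a minimal sketch in plain Java. It is not the Arquillian Cube implementation: the class UrlPoller and its methods await and httpStatus are hypothetical names I made up for illustration, and the 2-second request timeout is an arbitrary assumption.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.function.IntPredicate;
import java.util.function.IntSupplier;

/** Hypothetical helper for illustration only; not part of Arquillian Cube. */
final class UrlPoller {

    private UrlPoller() {
    }

    /**
     * Calls the probe until its result satisfies the predicate or the
     * attempts are used up. Returns true when the predicate was satisfied.
     */
    static boolean await(IntSupplier probe, IntPredicate ready, int maxAttempts, Duration interval) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (ready.test(probe.getAsInt())) {
                return true;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(interval.toMillis());
                } catch (InterruptedException e) {
                    // Preserve the interrupt flag and stop waiting
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }

    /** Returns the HTTP status of a GET request, or -1 when the URL cannot be reached. */
    static int httpStatus(URI uri) {
        HttpClient client = HttpClient.newHttpClient();
        HttpRequest request = HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
        try {
            return client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode();
        } catch (IOException e) {
            // Route not exposed yet, connection refused, or request timed out
            return -1;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        }
    }
}
```

With such a helper, a call like UrlPoller.await(() -> UrlPoller.httpStatus(uri), code -> code >= 200 && code < 300, 30, Duration.ofSeconds(1)) would block until the route answers with a 2xx status or 30 attempts have passed. In the test class itself we rely on IstioAssistant for that.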

Let’s take a look at the following JUnit test class, which uses Arquillian Cube OpenShift with Istio support. In addition to the standard test setup for running on an OpenShift instance, I have added the Istio resource file customer-to-account-route.yaml and call the await method provided by IstioAssistant. The first test, test1CustomerRoute, creates a new customer, so it needs to wait until customer-route is deployed on OpenShift. The next test, test2AccountRoute, adds an account for the newly created customer, so it needs to wait until account-route is deployed on OpenShift. Finally, test3GetCustomerWithAccounts calls the method responsible for finding a customer by id together with the list of accounts. In that case customer-service calls an endpoint exposed by account-service. As you have probably noticed, the last line of that test method asserts that the list of accounts is empty: Assert.assertTrue(c.getAccounts().isEmpty()). Why? We will simulate a timeout in the communication between customer-service and account-service using Istio rules.

@Category(RequiresOpenshift.class)
@RequiresOpenshift
@Templates(templates = {
        @Template(url = "classpath:account-deployment.yaml"),
        @Template(url = "classpath:deployment.yaml")
})
@RunWith(ArquillianConditionalRunner.class)
@IstioResource("classpath:customer-to-account-route.yaml")
@FixMethodOrder(MethodSorters.NAME_ASCENDING)
public class IstioRuleTest {

    private static final Logger LOGGER = LoggerFactory.getLogger(IstioRuleTest.class);

    // Shared between the ordered tests: set by test1, used by test2 and test3
    private static String id;

    @ArquillianResource
    private IstioAssistant istioAssistant;

    @RouteURL(value = "customer-route", path = "/customer")
    private URL customerUrl;

    @RouteURL(value = "account-route", path = "/account")
    private URL accountUrl;

    @Test
    public void test1CustomerRoute() throws IOException {
        LOGGER.info("URL: {}", customerUrl);
        // Wait until the customer route answers successfully
        istioAssistant.await(customerUrl, r -> r.isSuccessful());
        LOGGER.info("URL ready. Proceeding to the test");
        OkHttpClient httpClient = new OkHttpClient();
        RequestBody body = RequestBody.create(MediaType.parse("application/json"), "{\"name\":\"John Smith\", \"age\":33}");
        Request request = new Request.Builder().url(customerUrl).post(body).build();
        // An IOException now fails the test instead of being swallowed
        try (Response response = httpClient.newCall(request).execute()) {
            ResponseBody b = response.body();
            Assert.assertNotNull(b);
            String json = b.string();
            LOGGER.info("Test: response={}", json);
            Assert.assertEquals(200, response.code());
            Customer c = Json.decodeValue(json, Customer.class);
            id = c.getId();
        }
    }

    @Test
    public void test2AccountRoute() throws IOException {
        LOGGER.info("Route URL: {}", accountUrl);
        // Wait until the account route answers successfully
        istioAssistant.await(accountUrl, r -> r.isSuccessful());
        LOGGER.info("URL ready. Proceeding to the test");
        OkHttpClient httpClient = new OkHttpClient();
        RequestBody body = RequestBody.create(MediaType.parse("application/json"), "{\"number\":\"01234567890\", \"balance\":10000, \"customerId\":\"" + id + "\"}");
        Request request = new Request.Builder().url(accountUrl).post(body).build();
        try (Response response = httpClient.newCall(request).execute()) {
            ResponseBody b = response.body();
            Assert.assertNotNull(b);
            String json = b.string();
            LOGGER.info("Test: response={}", json);
            Assert.assertEquals(200, response.code());
        }
    }

    @Test
    public void test3GetCustomerWithAccounts() throws IOException {
        String url = customerUrl + "/" + id;
        LOGGER.info("Calling URL: {}", url);
        OkHttpClient httpClient = new OkHttpClient();
        Request request = new Request.Builder().url(url).get().build();
        try (Response response = httpClient.newCall(request).execute()) {
            ResponseBody b = response.body();
            Assert.assertNotNull(b);
            String json = b.string();
            LOGGER.info("Test: response={}", json);
            Assert.assertEquals(200, response.code());
            Customer c = Json.decodeValue(json, Customer.class);
            // Empty because the call to account-service times out
            Assert.assertTrue(c.getAccounts().isEmpty());
        }
    }
}

3. Creating Istio rules

One of the interesting features provided by Istio is the ability to inject faults into route rules. We can specify one or more faults to inject while forwarding HTTP requests to the rule’s corresponding request destination. The faults can be either delays or aborts. For both types of fault, the percent field defines the percentage of requests affected. In the following Istio resource I have defined a 2-second delay for every single request sent to account-service.

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: account-service
spec:
  hosts:
    - account-service
  http:
    - fault:
        delay:
          fixedDelay: 2s
          percent: 100
      route:
        - destination:
            host: account-service
            subset: v1
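
To illustrate what the percent and fixedDelay fields mean together, here is a toy model in Java. It is only a sketch of the semantics as I understand them, not how Istio or Envoy implement fault injection; the class DelayFault and its methods are hypothetical names introduced for this example.

```java
import java.time.Duration;
import java.util.Random;

/** Toy model of a delay fault; hypothetical, for illustration only. */
final class DelayFault {

    private final double percent;      // share of requests to delay, 0..100
    private final Duration fixedDelay; // delay added to each selected request

    DelayFault(double percent, Duration fixedDelay) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be within 0..100");
        }
        if (fixedDelay.isZero() || fixedDelay.isNegative()) {
            throw new IllegalArgumentException("fixedDelay must be positive");
        }
        this.percent = percent;
        this.fixedDelay = fixedDelay;
    }

    /** Returns the delay for one request; Duration.ZERO means the request is untouched. */
    Duration delayFor(Random random) {
        // nextDouble() lies in [0.0, 1.0), so percent=100 selects every
        // request and percent=0 selects none
        return random.nextDouble() * 100 < percent ? fixedDelay : Duration.ZERO;
    }

    /** Counts how many of the simulated requests would be delayed. */
    long countDelayed(int requests, Random random) {
        long delayed = 0;
        for (int i = 0; i < requests; i++) {
            if (!delayFor(random).isZero()) {
                delayed++;
            }
        }
        return delayed;
    }
}
```

With percent set to 100, as in the resource above, every simulated request gets the 2-second delay. A lower value, for example 50, would delay roughly half of the requests, which would make the test outcome non-deterministic, so 100 is the convenient choice for an integration test.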

Besides the VirtualService we also need to define a DestinationRule for account-service. It is really simple: it just defines the v1 subset by the version label of the target service.

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: account-service
spec:
  host: account-service
  subsets:
    - name: v1
      labels:
        version: v1

Before running the test we should also modify the OpenShift deployment templates of our sample applications. We need to inject the Istio sidecar into the pod definitions using the istioctl kube-inject command, as shown below.

$ istioctl kube-inject -f deployment.yaml -o customer-deployment-istio.yaml

$ istioctl kube-inject -f account-deployment.yaml -o account-deployment-istio.yaml

Finally, we can rewrite the generated files as OpenShift templates. Here’s the fragment of the OpenShift template containing the DeploymentConfig definition for account-service.

kind: Template
apiVersion: v1
metadata:
  name: account-template
objects:
  - kind: DeploymentConfig
    apiVersion: v1
    metadata:
      name: account-service
      labels:
        app: account-service
        name: account-service
        version: v1
    spec:
      template:
        metadata:
          annotations:
            sidecar.istio.io/status: '{"version":"364ad47b562167c46c2d316a42629e370940f3c05a9b99ccfe04d9f2bf5af84d","initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["istio-envoy","istio-certs"],"imagePullSecrets":null}'
          name: account-service
          labels:
            app: account-service
            name: account-service
            version: v1
        spec:
          containers:
          - env:
            - name: DATABASE_NAME
              valueFrom:
                secretKeyRef:
                  key: database-name
                  name: mongodb
            - name: DATABASE_USER
              valueFrom:
                secretKeyRef:
                  key: database-user
                  name: mongodb
            - name: DATABASE_PASSWORD
              valueFrom:
                secretKeyRef:
                  key: database-password
                  name: mongodb
            image: piomin/account-vertx-service
            name: account-vertx-service
            ports:
            - containerPort: 8095
            resources: {}
          - args:
            - proxy
            - sidecar
            - --configPath
            - /etc/istio/proxy
            - --binaryPath
            - /usr/local/bin/envoy
            - --serviceCluster
            - account-service
            - --drainDuration
            - 45s
            - --parentShutdownDuration
            - 1m0s
            - --discoveryAddress
            - istio-pilot.istio-system:15007
            - --discoveryRefreshDelay
            - 1s
            - --zipkinAddress
            - zipkin.istio-system:9411
            - --connectTimeout
            - 10s
            - --statsdUdpAddress
            - istio-statsd-prom-bridge.istio-system:9125
            - --proxyAdminPort
            - "15000"
            - --controlPlaneAuthPolicy
            - NONE
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: INSTANCE_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: ISTIO_META_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: ISTIO_META_INTERCEPTION_MODE
              value: REDIRECT
            image: gcr.io/istio-release/proxyv2:1.0.1
            imagePullPolicy: IfNotPresent
            name: istio-proxy
            resources:
              requests:
                cpu: 10m
            securityContext:
              readOnlyRootFilesystem: true
              runAsUser: 1337
            volumeMounts:
            - mountPath: /etc/istio/proxy
              name: istio-envoy
            - mountPath: /etc/certs/
              name: istio-certs
              readOnly: true
          initContainers:
          - args:
            - -p
            - "15001"
            - -u
            - "1337"
            - -m
            - REDIRECT
            - -i
            - '*'
            - -x
            - ""
            - -b
            - 8095,
            - -d
            - ""
            image: gcr.io/istio-release/proxy_init:1.0.1
            imagePullPolicy: IfNotPresent
            name: istio-init
            resources: {}
            securityContext:
              capabilities:
                add:
                - NET_ADMIN
          volumes:
          - emptyDir:
              medium: Memory
            name: istio-envoy
          - name: istio-certs
            secret:
              optional: true
              secretName: istio.default

4. Building applications with Vert.x framework

The sample applications are implemented using the Eclipse Vert.x framework. They use a Mongo database for storing data. The connection settings are injected into the pods using Kubernetes Secrets.

public class MongoVerticle extends AbstractVerticle {

    private static final Logger LOGGER = LoggerFactory.getLogger(MongoVerticle.class);

    @Override
    public void start() throws Exception {
        // Read only the three variables populated from the mongodb Secret
        ConfigStoreOptions envStore = new ConfigStoreOptions()
                .setType("env")
                .setConfig(new JsonObject().put("keys", new JsonArray().add("DATABASE_USER").add("DATABASE_PASSWORD").add("DATABASE_NAME")));
        ConfigRetrieverOptions options = new ConfigRetrieverOptions().addStore(envStore);
        ConfigRetriever retriever = ConfigRetriever.create(vertx, options);
        retriever.getConfig(r -> {
            if (r.failed()) {
                LOGGER.error("Unable to read database settings", r.cause());
                return;
            }
            String user = r.result().getString("DATABASE_USER");
            String password = r.result().getString("DATABASE_PASSWORD");
            String db = r.result().getString("DATABASE_NAME");
            JsonObject config = new JsonObject();
            // Keep the password out of the log
            LOGGER.info("Connecting {} as {}", db, user);
            config.put("connection_string", "mongodb://" + user + ":" + password + "@mongodb/" + db);
            final MongoClient client = MongoClient.createShared(vertx, config);
            final CustomerRepository service = new CustomerRepositoryImpl(client);
            // Expose the repository on the event bus under the customer-service address
            ProxyHelper.registerService(CustomerRepository.class, vertx, service, "customer-service");
        });
    }
}
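
The connection string above is built by plain concatenation, which breaks as soon as the user name or password contains characters such as @, : or /. A possible way to harden it is sketched below, assuming the same three environment variables. The class MongoConnectionString and its fromEnv method are hypothetical helpers that are not part of the sample repository.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;

/** Hypothetical helper for illustration; not part of the sample applications. */
final class MongoConnectionString {

    private MongoConnectionString() {
    }

    /** Builds a mongodb:// URI from the DATABASE_* variables found in env. */
    static String fromEnv(Map<String, String> env, String host) {
        String user = require(env, "DATABASE_USER");
        String password = require(env, "DATABASE_PASSWORD");
        String db = require(env, "DATABASE_NAME");
        return "mongodb://" + encode(user) + ":" + encode(password) + "@" + host + "/" + db;
    }

    private static String require(Map<String, String> env, String key) {
        String value = env.get(key);
        if (value == null || value.isEmpty()) {
            throw new IllegalStateException("Missing environment variable: " + key);
        }
        return value;
    }

    private static String encode(String value) {
        // URLEncoder targets HTML forms and writes a space as '+';
        // inside a URI the space has to be %20 instead
        return URLEncoder.encode(value, StandardCharsets.UTF_8).replace("+", "%20");
    }

    public static void main(String[] args) {
        // Uses the real process environment; fails fast if a variable is missing
        System.out.println(fromEnv(System.getenv(), "mongodb"));
    }
}
```

Failing fast on a missing variable also gives a clearer error than a connection string containing the literal text null.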

MongoDB should be started on OpenShift before any of the applications that connect to it. To achieve that, we reference a Mongo deployment resource in the Arquillian Cube configuration file through the env.config.resource.name property.

The Arquillian Cube configuration is shown below. We will use the existing namespace myproject, which has already been granted the required privileges (see Step 1). We also need to pass the authentication token of the admin user. You can obtain it with the command oc whoami -t after logging in to the OpenShift cluster.

<extension qualifier="openshift">
    <property name="namespace.use.current">true</property>
    <property name="namespace.use.existing">myproject</property>
    <property name="kubernetes.master">https://192.168.99.100:8443</property>
    <property name="cube.auth.token">TYYccw6pfn7TXtH8bwhCyl2tppp5MBGq7UXenuZ0fZA</property>
    <property name="env.config.resource.name">mongo-deployment.yaml</property>
</extension>

The communication between customer-service and account-service is handled by the Vert.x WebClient. We set the client’s timeout to 1 second. Because Istio injects a 2-second delay into the route, the call is going to end with a timeout.

public class AccountClient {

    private static final Logger LOGGER = LoggerFactory.getLogger(AccountClient.class);

    private Vertx vertx;

    public AccountClient(Vertx vertx) {
        this.vertx = vertx;
    }

    public AccountClient findCustomerAccounts(String customerId, Handler<AsyncResult<List<Account>>> resultHandler) {
        WebClient client = WebClient.create(vertx);
        // Give up after 1000 ms; the injected 2-second delay makes this call time out
        client.get(8095, "account-service", "/account/customer/" + customerId).timeout(1000).send(res2 -> {
            if (res2.succeeded()) {
                LOGGER.info("Response: {}", res2.result().bodyAsString());
                List<Account> accounts = res2.result().bodyAsJsonArray().stream().map(it -> Json.decodeValue(it.toString(), Account.class)).collect(Collectors.toList());
                resultHandler.handle(Future.succeededFuture(accounts));
            } else {
                // Timeout or connection failure: fall back to an empty list
                resultHandler.handle(Future.succeededFuture(new ArrayList<>()));
            }
        });
        return this;
    }
}
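
The interplay between the client timeout and the injected delay can be reproduced without a cluster. The sketch below uses only the JDK (Java 9 or newer) rather than Vert.x, so it is an analogy and not the sample application’s code. The class TimeoutFallbackDemo, its methods and the account number returned are assumptions made for the example.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

/** JDK-only analogy of the timeout-with-fallback behaviour; hypothetical names. */
final class TimeoutFallbackDemo {

    private TimeoutFallbackDemo() {
    }

    /** Stands in for account-service behind a delay fault: answers after delayMillis. */
    static CompletableFuture<List<String>> delayedAccounts(long delayMillis) {
        return CompletableFuture.supplyAsync(
                () -> List.of("01234567890"),
                CompletableFuture.delayedExecutor(delayMillis, TimeUnit.MILLISECONDS));
    }

    /** Mirrors the client: on timeout or any other failure, fall back to an empty list. */
    static List<String> findAccounts(long delayMillis, long timeoutMillis) {
        return delayedAccounts(delayMillis)
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS)
                .exceptionally(error -> List.of())
                .join();
    }

    public static void main(String[] args) {
        // 2 s delay against a 1 s timeout: the fallback wins
        System.out.println(findAccounts(2000, 1000)); // prints []
        // Without the injected delay the real answer arrives in time
        System.out.println(findAccounts(100, 1000));  // prints [01234567890]
    }
}
```

This is the reason the third test expects an empty list of accounts: the customer is still returned with HTTP 200, only the account lookup silently falls back.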

The full code of the sample applications is available on GitHub in the repository https://github.com/piomin/sample-vertx-kubernetes/tree/openshift-istio-tests.

5. Running tests with Arquillian Cube OpenShift extension

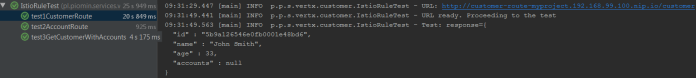

You can run the tests during the Maven build or directly from your IDE. The test1CustomerRoute test is executed first. It adds a new customer and saves the generated id for the next two tests.

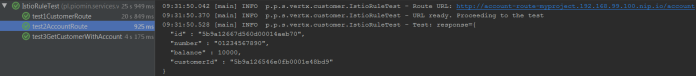

The next test is test2AccountRoute. It adds an account for the customer created during the previous test.

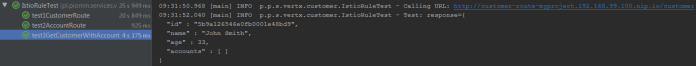

Finally, the test responsible for verifying communication between the microservices runs. It verifies that the list of accounts is empty, which is the result of the timeout in communication with account-service.
